To test or not to test? It isn’t even a question! You should be integrating testing into your email strategy at all times. Your audience is constantly changing, and you need to change with them to continue engaging successfully.

A/B Testing Best Practices

Identify Your Goals

Make sure your team discusses which goals matter and why. Frame each goal as a hypothesis that justifies the variation: if we change X, we think it will increase Y. For example: we want to raise our click-through rate, so we are going to change the color of our CTA buttons because we believe that will entice more people to click.

Ask your team these questions to home in on your goals:

- What are your business KPIs?

- What are your email KPIs?

- What email metrics do you want to improve?

- Have you identified your best/worst performing emails so far?

- What testing have you done so far?

Build a Plan

Planning is an extremely important piece your team needs to address before you begin your testing journey. Without a plan, you’ll be testing things at random, and that won’t give you quality data. Consider these factors to develop your plan:

- Test one thing at a time. Too many variables are introduced if you’re testing multiple things at the same time, and it will be hard to determine which change is causing a positive response.

- Always use a control group. Make sure you send about 10% of your audience the regular/historical version of your email. This way, you will have a control group to compare the test version to.

- Take targeting into account. Think about your audience personas and what will appeal to them – layout, copy, tone of voice etc.
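As a rough illustration of the control group idea above, here is a minimal Python sketch that randomly holds out about 10% of a list as the control group. The function name `split_audience`, the example addresses, and the fixed seed are all hypothetical; your email platform may well handle this split for you.

```python
import random


def split_audience(recipients, control_share=0.10, seed=42):
    """Randomly assign recipients to a control group and a test group.

    control_share is the fraction that receives the regular/historical
    email; everyone else receives the test version.
    """
    shuffled = list(recipients)
    # A seeded generator keeps the split reproducible (the seed is arbitrary).
    random.Random(seed).shuffle(shuffled)
    control_size = round(len(shuffled) * control_share)
    return shuffled[:control_size], shuffled[control_size:]


# Hypothetical audience of 1,000 subscribers.
audience = [f"subscriber{i}@example.com" for i in range(1000)]
control_group, test_group = split_audience(audience)
print(len(control_group), len(test_group))
```

Assigning people at random, rather than taking the first 10% of the list, helps keep the two groups comparable so that any difference in results can be attributed to the change being tested.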

Review the Results

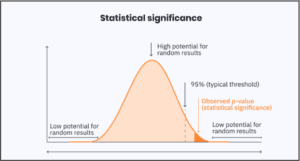

When reviewing your results, make sure they are statistically significant before declaring a winner. Did the change you made really produce a large enough improvement to justify adopting it in future campaigns?

Here’s a breakdown of the elements of statistical significance in more detail:

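The P-value: This is the probability value: how likely you would be to see a difference at least as large as the one you measured if your change actually had no effect. The smaller the P-value, the stronger the evidence that the change made a real difference, and a P-value below 5% (0.05) is the standard threshold for statistical significance.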

Sample size: If a data set is too small, the results might not be reliable.

Confidence level: How confident you can be that the results didn’t occur by chance. The typical confidence level for statistical significance is 95%, which corresponds to the 5% P-value threshold.
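To make these terms concrete, here is a minimal Python sketch of one common way to check significance for click or open rates: a two-proportion z-test. This is an assumption about method, not something your reporting tool necessarily uses, and the function name and the campaign numbers are hypothetical.

```python
import math


def two_proportion_z_test(clicks_a, sent_a, clicks_b, sent_b):
    """Compare two click rates; return (z score, two-sided P-value)."""
    rate_a = clicks_a / sent_a
    rate_b = clicks_b / sent_b
    # Pooled rate: the click rate if both versions performed the same.
    pooled = (clicks_a + clicks_b) / (sent_a + sent_b)
    std_error = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    if std_error == 0:
        raise ValueError("Cannot test: no variation in the data")
    z = (rate_b - rate_a) / std_error
    # Two-sided P-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical results: control (A) 100 clicks from 1,000 sends,
# test version (B) 1,080 clicks from 9,000 sends.
z, p_value = two_proportion_z_test(100, 1000, 1080, 9000)
print(f"z = {z:.2f}, P-value = {p_value:.3f}")
print("Significant at 95% confidence" if p_value < 0.05 else "Not significant yet")
```

With these made-up numbers, the test version clicks at 12% against 10% for the control, yet the P-value lands a little above 0.05, so the result would not count as significant at the 95% confidence level. A small control group is often the reason.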

Taking all of the above into consideration: to make sure your test provides valuable information, your sample size needs to be large enough to be reliable, and a difference of 3-5% between the versions should be your target for indicating a significant change.
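How large is "large enough"? One rough way to estimate it is the standard sample size formula for comparing two rates, sketched below in Python. Several things here are assumptions rather than guidance from this article: the 80% power setting, groups of equal size, reading the target difference as percentage points, and the hypothetical function name and baseline rate.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline_rate, expected_rate,
                          confidence=0.95, power=0.80):
    """Estimate recipients needed in EACH group to detect the difference.

    Assumes two groups of equal size and a two-sided test.
    power=0.80 is a common convention, not a figure from this article.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical goal: lift a 10% click rate to 13% (a 3-point difference).
needed = sample_size_per_group(0.10, 0.13)
print(f"Recipients needed per group: {needed}")
```

Smaller target differences need far larger groups, because the required size grows with the inverse square of the difference. Halving the lift you want to detect roughly quadruples the audience you need.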

Repeat your tests after some time to confirm that the changes you made are still valuable. The amount of time between tests will depend on your testing strategy. If your strategy is very comprehensive, we suggest testing again after about a year. If you’re performing a quick test or the results of your test were very close, try re-testing after 6 months or sooner. Remember that your audience is constantly changing, and you want to make sure what you’ve changed is still working for them. Testing never ends!

Surprise your audience with innovation! Vary the elements you test and also consider the other types of communication you utilize – SMS, push notifications, direct mail, etc. Look to see if one form of communication is better for a specific campaign than another.

Ask your audience for feedback. Statistics are great, but getting feedback straight from your audience is even better!

And finally, be patient – testing takes time and a lot of variations. Don’t rush, and test things with intent.

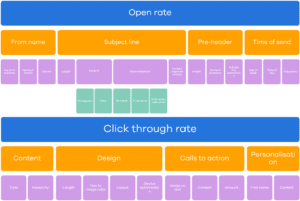

Crafting Your Testing Strategy

As we talked about previously, creating a plan is essential to your testing strategy, and your goals will determine the path of your plan. For example, if you are looking to increase open rate, you can focus on testing your from name, subject line, pre-header text, or the time of send. If you are looking to increase click-through rate, focus on content, design, calls to action, and personalization.

Use some of these tactics to test various aspects of your email to see what works best for your audience:

Subject Line Testing Ideas

- Length: longer/shorter

- Emojis: used or not

- Messaging: cryptic or direct

- Tone: serious or humorous / Intriguing or straightforward

- Personalization (first name/product)

- CTA in subject line

- Statement or Question

CTA Testing Ideas

- Button Color

- Button Shape

- Button Size

- Button Location

- Personalized CTA

- Wording: Download, Click, Buy or Get, Learn, Discover

Content Testing Ideas

- Image or Text Heavy

- Salutation: personalized or generic / formal or relaxed

- Copy length

- Bullet points or flowing paragraphs

- Videos or no videos

- Hard or soft sell

- Background Color

- Color Schemes

- Typography

- Logo Placement

- Logo Size

Other Testing Ideas

- Time of Day

- Day of Week

- Plain Text or HTML

- Positive vs. negative pitch

- Closing Line/sign off

- Sender profile

Continuously testing and reshaping how you engage with your audience will drive even more success within your email program. If you need any help starting or adjusting your strategy, reach out to your CSM, and they or our Strategy Consultants can assist!