We all wish we did more testing, but we don’t. And when we do, we’re sometimes unsure of what the results mean. The data is just never as magical as we think it’s going to be, amiright?

Why is that? More often than not, we test small changes that either don’t matter at all or only matter at massive scale. If we test a green call-to-action (CTA) button against a blue one, the results are likely to be inconclusive unless we’re sending to millions of people.
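To put rough numbers on that, here’s a minimal Python sketch of the standard sample-size formula for comparing two proportions with a two-sided z-test. The 2% baseline click rate, the two lifts, and the defaults of 5% significance and 80% power are all assumptions chosen for illustration, not results from real sends.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(p_control: float, p_variant: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Recipients needed in EACH arm of a two-variant test to detect the
    difference between two rates (e.g. click rates) with a two-sided
    two-proportion z-test."""
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # critical value for a two-sided test
    z_beta = z.inv_cdf(power)            # quantile for the desired power
    p_bar = (p_control + p_variant) / 2  # average rate, used for the null-hypothesis term
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_control - p_variant) ** 2)


# Hypothetical numbers: a 2.0% baseline click rate.
small_change = sample_size_per_variant(0.020, 0.021)  # e.g. a CTA color tweak
big_change = sample_size_per_variant(0.020, 0.025)    # e.g. a whole new approach

print(f"2.0% -> 2.1%: {small_change:,} recipients per variant")
print(f"2.0% -> 2.5%: {big_change:,} recipients per variant")
```

Under these made-up numbers, detecting a lift from 2.0% to 2.1% takes on the order of 300,000 recipients per variant, while a lift from 2.0% to 2.5% needs roughly 14,000. That gap is why bigger changes are so much easier to test.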

So how do we make tests that show meaningful results? We need to test bigger changes.

Use distinctly different approaches that are designed to challenge your assumptions. Let me show you what I’m talking about. Here’s my recommended pattern for testing a new newsletter or email type.

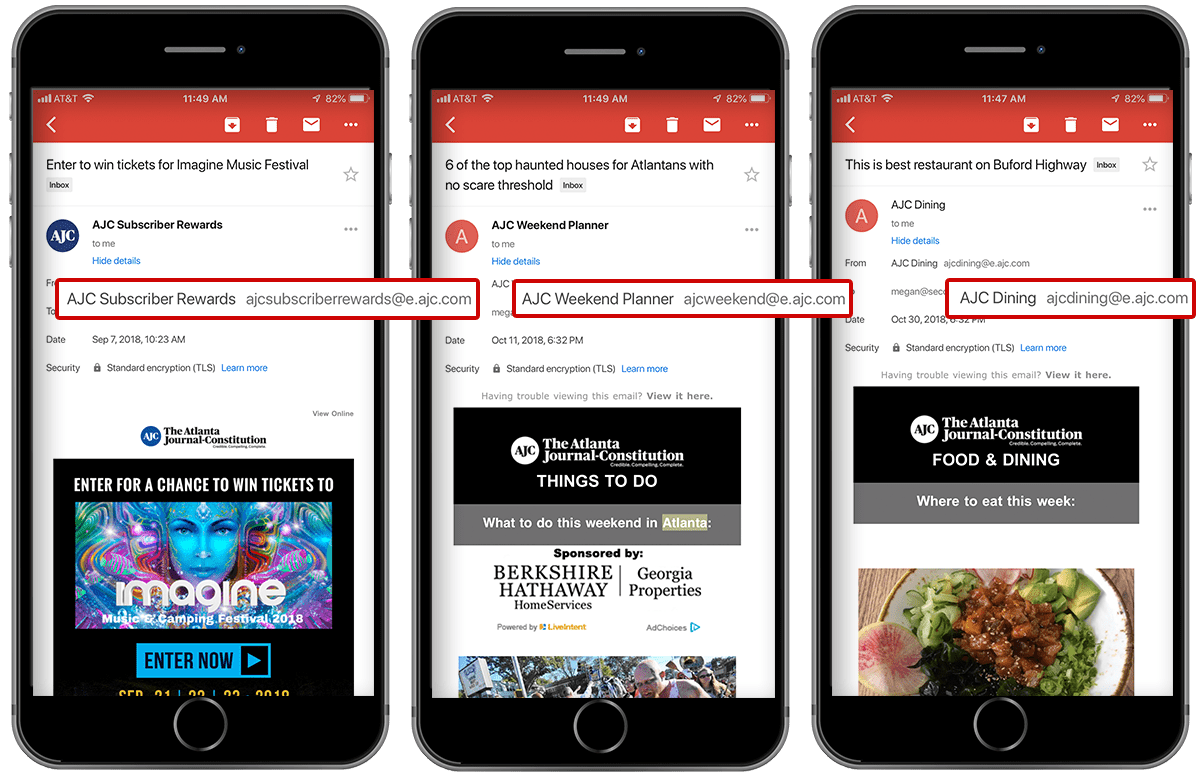

First, test your ‘From Name’

Did you know From Names are the #1 factor in open rates? It’s true. Test a few distinct From Name variants before you start testing subject lines: send from a person, from an organization, and from both (like “Tim at Secondstreet”). Compare your open rates, then pick a winner and stick with it.
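One way to judge whether a From Name winner is real or just noise is a two-proportion z-test on open rates. The sketch below is hypothetical: the open and delivery counts are invented, the 0.05 threshold is a common convention rather than a rule, and it only compares the leader with the runner-up. With several variants, a chi-square test or a multiple-comparison correction would be more rigorous.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(opens_a: int, sent_a: int, opens_b: int, sent_b: int) -> float:
    """Two-sided p-value for the difference between two open rates (pooled z-test)."""
    rate_a = opens_a / sent_a
    rate_b = opens_b / sent_b
    pooled = (opens_a + opens_b) / (sent_a + sent_b)  # shared rate if there's no real difference
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / sent_a + 1 / sent_b))
    z = (rate_a - rate_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical results: From Name variant -> (unique opens, emails delivered)
results = {
    "Tim": (2_150, 10_000),
    "Secondstreet": (1_900, 10_000),
    "Tim at Secondstreet": (2_300, 10_000),
}

# Rank variants by open rate, highest first.
ranked = sorted(results.items(), key=lambda item: item[1][0] / item[1][1], reverse=True)
for name, (opens, sent) in ranked:
    print(f"{name}: {opens / sent:.1%} open rate")

leader, (leader_opens, leader_sent) = ranked[0]
runner_up, (runner_opens, runner_sent) = ranked[1]
p_value = two_proportion_p_value(leader_opens, leader_sent, runner_opens, runner_sent)

if p_value < 0.05:  # conventional threshold (assumption)
    print(f"{leader} beats {runner_up} (p = {p_value:.3f})")
else:
    print(f"{leader} vs {runner_up} is too close to call (p = {p_value:.3f}); keep testing")
```

If the leader isn’t clearly ahead, that’s a signal to run the test again before committing to a From Name.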

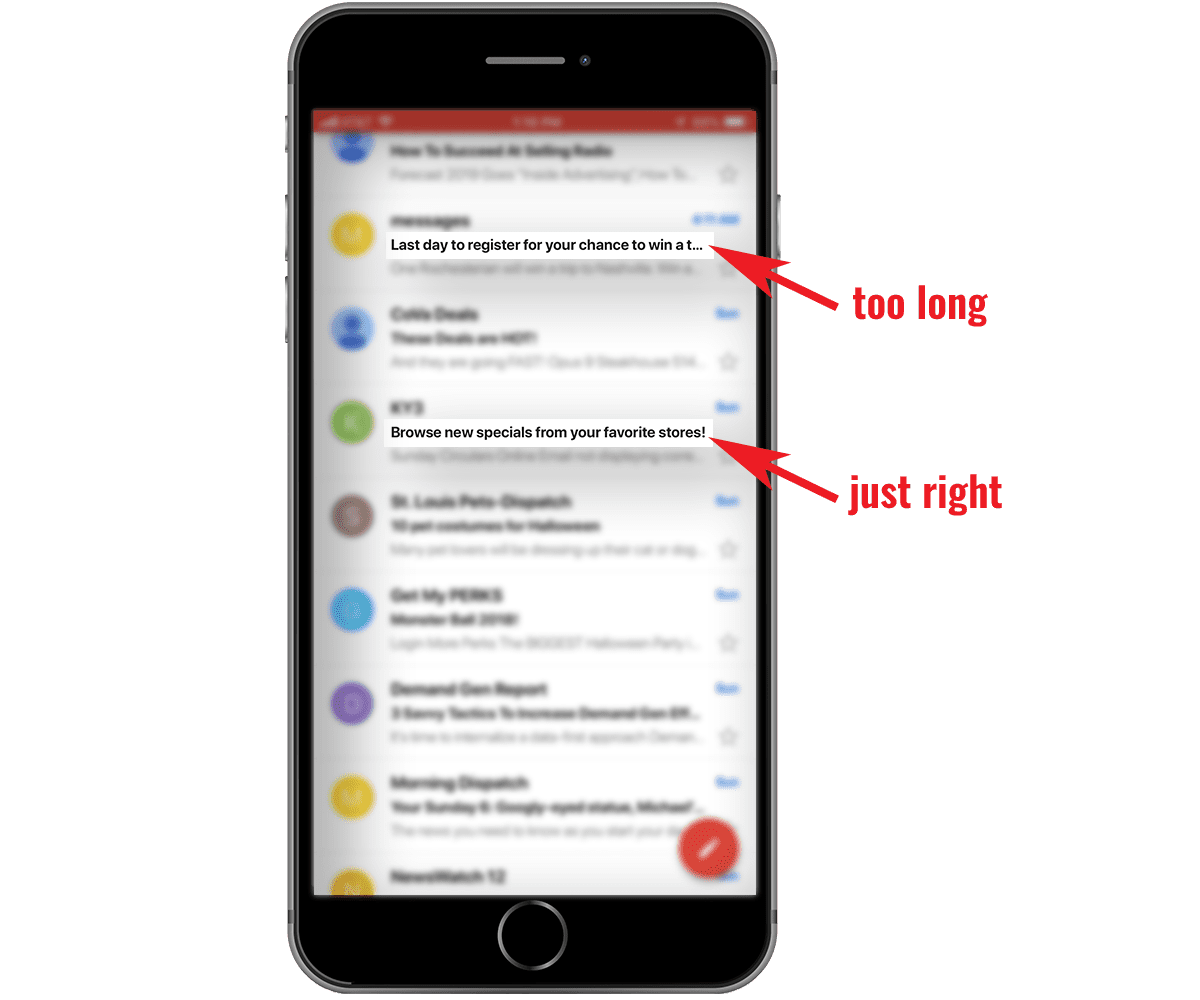

Second, test subject line formats

Content companies can’t afford to test their newsletter subject lines constantly; it’s a huge resource drain. Instead, test subject line formats: a content synopsis, individual headlines, dates, issue numbers. Test them all, and test each one multiple times to make sure you’re getting a clear signal. Then pick a winner based on open rate and move on.

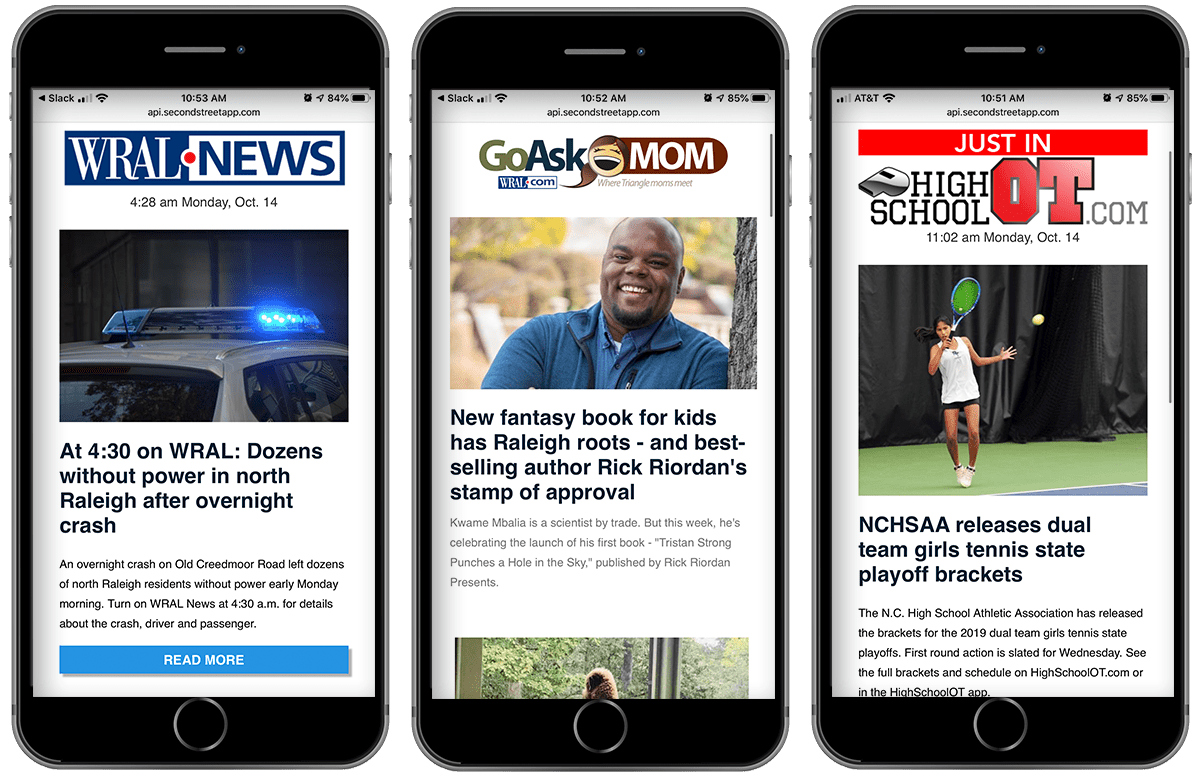

Finally, test your layouts

There’s a lot to test here, but be reasonable. Pick 3–4 layouts or approaches you like and test them for a week. Choose your winner based on click-to-open rate (unique clicks divided by unique opens). Because it only counts people who actually opened the email, it measures how well each layout performs without being skewed by differences in open rates.
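Here’s a small sketch of why click-to-open rate is the fairer yardstick. The layout names and counts are hypothetical; the point is that ranking by plain click rate (clicks per delivered email) and ranking by click-to-open rate can disagree when open rates differ.

```python
from dataclasses import dataclass


@dataclass
class LayoutResult:
    """Hypothetical per-layout totals from one week of sends."""
    name: str
    delivered: int
    unique_opens: int
    unique_clicks: int

    @property
    def click_rate(self) -> float:
        # Unique clicks per delivered email: also reflects how many people opened.
        return self.unique_clicks / self.delivered

    @property
    def click_to_open_rate(self) -> float:
        # Unique clicks divided by unique opens: only people who saw the layout count.
        return self.unique_clicks / self.unique_opens if self.unique_opens else 0.0


results = [
    LayoutResult("Layout A", delivered=10_000, unique_opens=2_500, unique_clicks=300),
    LayoutResult("Layout B", delivered=10_000, unique_opens=2_000, unique_clicks=280),
    LayoutResult("Layout C", delivered=10_000, unique_opens=2_200, unique_clicks=242),
]

for r in results:
    print(f"{r.name}: click rate {r.click_rate:.2%}, click-to-open {r.click_to_open_rate:.2%}")

best_by_click_rate = max(results, key=lambda r: r.click_rate)
best_by_ctor = max(results, key=lambda r: r.click_to_open_rate)
print(f"Winner by click rate: {best_by_click_rate.name}")
print(f"Winner by click-to-open rate: {best_by_ctor.name}")
```

In this made-up data, Layout A wins on click rate only because more people opened it; among the people who actually opened the email, Layout B drew more clicks.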

Run these three tests in order, methodically, over the course of 2–3 weeks. If you see a dip in engagement after a few months, repeat the second and third steps to see if you can win back engagement.