Introduction

As everyone rushes to implement an AI solution grounded in their knowledge, understanding key technical concepts can mean the difference between success and failure. Here are seven critical terms I believe everyone should be aware of to successfully plan their AI knowledge delivery strategy.

1. Context Window


What It Is: Think of a context window as your AI’s working memory – it’s the amount of text your AI model can consider at once when generating responses.

Why It Matters: Most knowledge content wasn’t designed with context windows in mind. A 5,000-word knowledge article works out to roughly 6,500 tokens or more, which can consume 75-100% of a smaller context window (for example, one of about 8,000 tokens), leaving little room for:

- The actual questions

- Conversation history

- Additional supporting information

- Response generation
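To make this budget concrete, here is a rough sketch in Python. It assumes the common rule of thumb of about 0.75 English words per token and an 8,192-token window; both numbers are illustrative assumptions, and real token counts depend on the model's tokenizer. The function names are hypothetical.

```python
import math

WORDS_PER_TOKEN = 0.75  # assumption: rough rule of thumb for English text

def estimate_tokens(word_count: int) -> int:
    """Roughly estimate how many tokens a text of `word_count` words uses."""
    return math.ceil(word_count / WORDS_PER_TOKEN)

def remaining_budget(window_tokens: int, article_words: int,
                     question_tokens: int, history_tokens: int,
                     reserved_for_answer: int) -> int:
    """Tokens left for supporting information after everything else is counted.

    A negative result means the request would not fit in the window.
    """
    used = (estimate_tokens(article_words) + question_tokens
            + history_tokens + reserved_for_answer)
    return window_tokens - used

window = 8_192  # assumption: a small context window, for illustration
article_tokens = estimate_tokens(5_000)
print(f"Article uses about {article_tokens} tokens "
      f"({article_tokens / window:.0%} of the window)")
print("Left over:", remaining_budget(window, 5_000, question_tokens=50,
                                     history_tokens=800, reserved_for_answer=500))
```

Under these assumptions the article alone takes about 81% of the window, and after a short question, some history, and room for the answer, fewer than 200 tokens remain for anything else.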

Business Impact: Oversized content can lead to:

- Slower response times

- Higher operating costs

- Less accurate answers

- Poor user experience

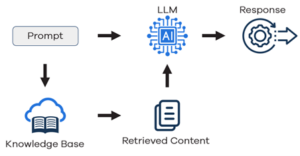

2. RAG (Retrieval Augmented Generation)

What It Is: RAG is a technique that enhances AI responses by retrieving relevant information from your knowledge base before generating answers.

Why It Matters: RAG is crucial because it:

- Grounds AI responses in your approved knowledge content

- Reduces hallucinations (AI making things up)

- Helps keep answers aligned with your policies

Business Impact: Proper RAG implementation can:

- Reduce support costs

- Improve answer accuracy

- Increase customer satisfaction

- Decrease escalations
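The retrieve-then-generate flow can be sketched in a few lines of Python. This is a minimal illustration, not a production retriever: it scores chunks by simple word overlap (Jaccard similarity) as a stand-in for vector similarity search, and the final generation step is left as a hypothetical `call_llm` placeholder because the model API depends on your provider. The sample knowledge base is invented for illustration.

```python
import re

def tokenize(text: str) -> set[str]:
    """Lowercase a text and return its set of words."""
    return set(re.findall(r"[a-z0-9']+", text.lower()))

def retrieve(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks that share the most words with the question.

    Word overlap (Jaccard similarity) stands in here for embedding search.
    """
    q = tokenize(question)

    def score(chunk: str) -> float:
        c = tokenize(chunk)
        return len(q & c) / len(q | c) if q | c else 0.0

    return sorted(chunks, key=score, reverse=True)[:k]

def build_grounded_prompt(question: str, context: list[str]) -> str:
    """Combine retrieved chunks and the question into one grounded prompt."""
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(context, 1))
    return (
        "Answer using only the sources below. "
        "If they do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )

knowledge_base = [
    "Refunds are issued within 14 days of receiving the returned item.",
    "Our support line is open Monday to Friday, 9am to 5pm.",
    "Passwords can be reset from the account settings page.",
]
question = "How long do refunds take?"
prompt = build_grounded_prompt(question, retrieve(question, knowledge_base, k=1))
print(prompt)
# answer = call_llm(prompt)  # hypothetical: replace with your provider's API call
```

The instruction to answer only from the supplied sources is what ties the model's output back to approved content; retrieval quality then determines whether the right sources are there to begin with.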

3. Vectorization & Vector Embeddings

What They Are: Vectorization is the process of converting text into numerical representations that AI can understand and compare. Vector embeddings are the resulting mathematical representations, essentially the “coordinates” that position your content in a multidimensional space of meaning.

Think of vectorization as the recipe and process of cooking, while vector embeddings are the finished dish. You need both to make your knowledge discoverable by AI.

Why It Matters: Traditional keyword search is like trying to find a book in a library using only exact title matches. Vectorization and embeddings together allow:

- Semantic understanding (finding content by meaning, not just keywords)

- Similarity comparisons (finding related content)

- Context-aware retrieval (understanding user intent)

- Multilingual capabilities (connecting meaning across languages)

Business Impact: Poor implementation of either can result in:

- Missed knowledge matches that leave support questions unresolved

- Irrelevant responses frustrating users

- Higher processing costs from inefficient matching

- Inconsistent answer quality
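The sketch below shows the mechanics of vectorization and similarity comparison in Python. It uses a simple hashed bag-of-words vectorizer as a stand-in for a real embedding model: it turns text into fixed-length numeric vectors and compares them with cosine similarity, but unlike model-generated embeddings it only matches shared words, not meaning. The `embed` function, its 256-dimension size, and the sample documents are illustrative assumptions.

```python
import hashlib
import math
import re

def embed(text: str, dim: int = 256) -> list[float]:
    """Map text to a fixed-length vector by hashing each word into a bucket.

    A stand-in for a trained embedding model, which would capture meaning
    rather than just word identity.
    """
    vector = [0.0] * dim
    for word in re.findall(r"[a-z0-9']+", text.lower()):
        # md5 gives a stable bucket across runs (Python's hash() is salted).
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vector[bucket] += 1.0
    return vector

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

docs = {
    "reset": "How to reset your account password",
    "billing": "Update the credit card used for billing",
}
query = embed("I forgot my password and need to reset it")
ranked = sorted(docs, key=lambda name: cosine_similarity(query, embed(docs[name])),
                reverse=True)
print(ranked)  # the password article should rank first
```

Swapping `embed` for a real embedding model keeps the rest of the pipeline the same, which is also where the semantic and multilingual matching described above comes from.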

4. Knowledge Chunking

What It Is: The process of breaking down large knowledge documents into smaller, AI-optimized pieces.

Why It Matters: Proper chunking is essential for:

- Efficient context window usage

- Faster response times

- More precise answers

- Better resource utilization

Business Impact: Retrieving optimized chunks (around 100 words) instead of traditional full articles (5,000+ words) can:

- Significantly reduce API costs

- Improve response speed

- Increase answer accuracy

- Enable better conversation flow
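A minimal chunking sketch in Python: it splits an article into windows of about 100 words with a small overlap, so that context cut at a chunk boundary also appears in the neighboring chunk. The `max_words` and `overlap` values are illustrative assumptions, and the function name is hypothetical; production pipelines often split on headings or paragraphs first and only then apply a size limit.

```python
def chunk_words(text: str, max_words: int = 100, overlap: int = 20) -> list[str]:
    """Split text into chunks of at most `max_words` words.

    Consecutive chunks share `overlap` words so that context spanning a
    boundary is not lost.
    """
    if not 0 <= overlap < max_words:
        raise ValueError("overlap must be between 0 and max_words - 1")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        if start + max_words >= len(words):
            break  # the last chunk already reaches the end of the text
    return chunks

article = " ".join(f"word{i}" for i in range(250))  # stand-in for a long article
chunks = chunk_words(article)
print(len(chunks), [len(c.split()) for c in chunks])  # 3 [100, 100, 90]
```

Overlap trades a little extra storage and tokens for fewer answers that are split awkwardly across two chunks.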

5. Metadata Enrichment

What It Is: The process of adding structured information to your knowledge content to improve AI understanding and retrieval.

Why It Matters: Rich metadata helps:

- Guide AI in understanding content context

- Improve search accuracy

- Enable better content filtering

- Support personalization

Business Impact: Proper metadata enrichment can:

- Reduce incorrect responses

- Improve content maintenance efficiency

- Enable better analytics

- Support multi-language deployment
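To illustrate metadata enrichment, the Python sketch below attaches a small metadata record to each chunk and filters on it before any similarity search runs. The field names (`product`, `language`, `audience`), the `Chunk` and `filter_chunks` names, and the sample chunks are all hypothetical; use whatever attributes your knowledge base actually tracks.

```python
from dataclasses import dataclass, field

@dataclass
class Chunk:
    text: str
    # Hypothetical metadata fields; adapt them to your own content model.
    metadata: dict[str, str] = field(default_factory=dict)

def filter_chunks(chunks: list[Chunk], **criteria: str) -> list[Chunk]:
    """Keep only chunks whose metadata matches every given key/value pair."""
    return [
        c for c in chunks
        if all(c.metadata.get(key) == value for key, value in criteria.items())
    ]

library = [
    Chunk("Reset your router by holding the button for 10 seconds.",
          {"product": "router", "language": "en", "audience": "customer"}),
    Chunk("Réinitialisez le routeur en maintenant le bouton 10 secondes.",
          {"product": "router", "language": "fr", "audience": "customer"}),
    Chunk("Escalate firmware faults to tier 2 with the device log attached.",
          {"product": "router", "language": "en", "audience": "agent"}),
]

# Narrow the search space before retrieval: English, customer-facing content only.
for chunk in filter_chunks(library, language="en", audience="customer"):
    print(chunk.text)
```

Filtering first keeps internal or wrong-language content out of customer answers, regardless of how similar its wording is to the question.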

6. Token Economics

What It Is: Tokens are the basic units that AI models use to process text: words, parts of words, or even punctuation marks. Token economics refers to managing how these units are used and their associated costs.

Why It Matters: Token usage directly impacts:

- Operating costs

- Response speed

- System performance

- Content optimization strategy

Business Impact: Poor token management can:

- Increase API costs by 200-300%

- Slow down response times

- Waste context window space

- Reduce system efficiency

For example, a traditional 5,000-word knowledge article might cost 15-20 cents to process, while an optimized 100-word chunk might cost only 1-2 cents (illustrative figures; actual costs depend on the model and its per-token pricing). Over millions of interactions, this difference becomes significant!
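The arithmetic behind this comparison can be sketched in Python. Both the 0.75 words-per-token ratio and the per-million-token price are placeholder assumptions, and the function names are hypothetical; substitute your model's tokenizer and your provider's current pricing to get real figures.

```python
import math

WORDS_PER_TOKEN = 0.75           # assumption: rough rule of thumb for English
PRICE_PER_MILLION_TOKENS = 3.00  # assumption: placeholder input price in USD

def estimate_tokens(word_count: int) -> int:
    """Roughly estimate the tokens needed for `word_count` words."""
    return math.ceil(word_count / WORDS_PER_TOKEN)

def input_cost_usd(word_count: int, requests: int = 1) -> float:
    """Estimated input cost of sending this much text on each of `requests` calls."""
    return estimate_tokens(word_count) * requests * PRICE_PER_MILLION_TOKENS / 1_000_000

requests = 1_000_000  # one million interactions
full_article = input_cost_usd(5_000, requests)
chunk = input_cost_usd(100, requests)
print(f"Full article: ${full_article:,.2f}  Chunk: ${chunk:,.2f}")
```

This only counts the knowledge content sent as input. Each request also carries the question, instructions, and the generated answer, so real-world savings per request will be smaller than the raw size ratio between an article and a chunk.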

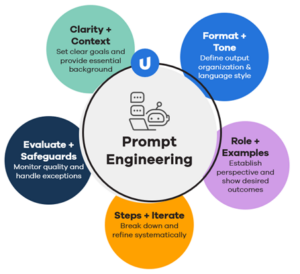

7. Prompt Engineering

What It Is: The art and science of creating instructions for AI models to get optimal responses. This includes how you format queries, provide context, and guide the AI’s output.

Why It Matters: Effective prompt engineering ensures:

- Consistent response formats

- Accurate information delivery

- Appropriate tone and style

- Efficient token usage

Business Impact: Well-designed prompts can:

- Reduce incorrect responses

- Improve customer satisfaction

- Lower operating costs

- Increase first-time resolution rates
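A prompt template is a practical way to apply these ideas consistently. The Python sketch below assembles a system instruction (tone, answer length, fallback behavior) and a user message containing the supplied context. The role/content message structure mirrors the format many chat-style model APIs accept, but it is an assumption here, as are the `build_messages` name and its parameters; check your provider's documentation for the exact request shape.

```python
def build_messages(question: str, context: list[str],
                   tone: str = "friendly and concise",
                   max_sentences: int = 3) -> list[dict[str, str]]:
    """Build a chat-style prompt with explicit instructions for tone and format."""
    system = (
        f"You are a customer support assistant. Use a {tone} tone. "
        f"Answer in at most {max_sentences} sentences, "
        "then list the source numbers you used. "
        "Use only the provided sources; if they do not answer the question, "
        "say so and suggest contacting support."
    )
    sources = "\n".join(f"[{i}] {text}" for i, text in enumerate(context, 1))
    user = f"Sources:\n{sources}\n\nQuestion: {question}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
    ]

messages = build_messages(
    "Can I return an opened item?",
    ["Opened items can be returned within 30 days if all accessories are included."],
)
for message in messages:
    print(f"{message['role']}: {message['content']}\n")
```

Fixing these instructions in one template keeps format and tone consistent across requests, and keeping the template short limits the tokens it adds to every call.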

Conclusion

Success in AI knowledge delivery isn’t just about having the right technology – it’s about understanding how to prepare and structure your knowledge for AI consumption. These seven terms represent the fundamental concepts that will help you avoid common pitfalls and build a solid foundation for your AI initiatives.

Remember: The quality of your AI’s responses will only be as good as your knowledge foundation! Understanding these concepts will help you make informed decisions about your knowledge transformation strategy.

Want to learn more about optimizing your knowledge for AI delivery? Contact us to discuss your specific challenges and requirements today.